From 32028d004b437e2778efb0532bd736a7c44d2db8 Mon Sep 17 00:00:00 2001

From: Adrian Kummerlaender

Date: Thu, 23 Sep 2021 23:19:45 +0200

Subject: WIP: Article on noise in ray marching

---

articles/2021-09-26_noise_and_ray_marching.org | 254 +++++++++++++++++++++++++

1 file changed, 254 insertions(+)

create mode 100644 articles/2021-09-26_noise_and_ray_marching.org

diff --git a/articles/2021-09-26_noise_and_ray_marching.org b/articles/2021-09-26_noise_and_ray_marching.org

new file mode 100644

index 0000000..c5ae921

--- /dev/null

+++ b/articles/2021-09-26_noise_and_ray_marching.org

@@ -0,0 +1,254 @@

+* Noise and Ray Marching

+[[https://literatelb.org][LiterateLB's]] volumetric visualization functionality relies on a simple ray marching implementation

+to sample both the 3D textures produced by the simulation side of things and the signed distance

+functions that describe the obstacle geometry. While this produces surprisingly [[https://www.youtube.com/watch?v=n86GfhhL7sA][nice looking]]

+results in many cases, some artifacts of the visualization algorithm are visible depending on the

+viewport and sample gradients. Extending the ray marching code to use a noise function is

+one way of mitigating such issues, which I want to explore in this article.

+

+While my [[https://www.youtube.com/watch?v=J2al5tV14M8][original foray]] into just in time visualization of Lattice Boltzmann based simulations

+was only an afterthought to [[https://tree.kummerlaender.eu/projects/symlbm_playground/][playing around]] with [[https://sympy.org][SymPy]] based code generation approaches, I have

+since put some work into a more fully fledged code. The resulting [[https://literatelb.org][LiterateLB]] code combines

+symbolic generation of optimized CUDA kernels and functionality for just in time fluid flow

+visualization into a single /literate/ [[http://code.kummerlaender.eu/LiterateLB/tree/lbm.org][document]].

+

+For all fortunate users of the [[https://nixos.org][Nix]] package manager, tangling and building this from the [[https://orgmode.org][Org]]

+document is as easy as executing the following commands on a CUDA-enabled NixOS host.

+

+#+BEGIN_SRC sh

+git clone https://code.kummerlaender.eu/LiterateLB

+cd LiterateLB

+nix-build

+./result/bin/nozzle

+#+END_SRC

+

+** Image Synthesis

+Let $C$ be the sample density along a ray $r$ of length $L$, given an absorption $\mu$. Then

+\[ C(r) = \int_0^L c(x) \mu(x) \exp\left(-\int_0^x \mu(t) dt\right) dx \]

+is the volume rendering equation yielding per-pixel density values that are easily mapped to

+some convenient color palette.

+

+#+BEGIN_EXPORT html

+

+#+END_EXPORT

+

+I.e. we integrate over the values $c(x)$ along the ray, weighted by the current absorption $\mu$

+and attenuated by the absorption accumulated up to the current point. This way samples that are

+closer to the view origin will be more prominent than samples of the same magnitude that are

+farther away. This is of course also a rough approximation of how a real volume behaves, hence

+the name.

+

+In practice this integral is approximated by the sum

+$$C(r) = \sum_{i=0}^N c(i \Delta x) \alpha (i \Delta x) \prod_{j=0}^{i-1} \left(1 - \alpha(j\Delta x)\right)$$

+for step width \(\Delta x \in \mathbb{R}^+\) in the most basic case.
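The sum above maps onto a simple front-to-back accumulation loop. The following Python sketch is only an illustration and not LiterateLB's CUDA implementation: the names `composite`, `sample`, `opacity` and `opacity_from_absorption` are hypothetical, and the mapping \(\alpha = 1 - \exp(-\mu \Delta x)\) from absorption to per-step opacity is an assumed, commonly used choice that the text does not spell out.

```python
import math


def composite(sample, opacity, length, dx):
    """Approximate C(r) along one ray by front-to-back accumulation.

    sample(x)  -- hypothetical callable returning the sample value c(x)
    opacity(x) -- hypothetical callable returning alpha(x) in [0, 1]
    length, dx -- ray length L and step width (both positive)
    """
    n = math.floor(length / dx + 1e-9)  # number of steps N
    color = 0.0
    transmittance = 1.0  # running product of (1 - alpha) over earlier steps
    for i in range(n + 1):
        x = i * dx
        a = opacity(x)
        color += sample(x) * a * transmittance
        transmittance *= 1.0 - a
    return color


def opacity_from_absorption(mu, dx):
    """Assumed mapping from an absorption mu(x) to a per-step opacity."""
    return lambda x: 1.0 - math.exp(-mu(x) * dx)
```

Keeping the product as a running `transmittance` avoids recomputing it for every step. In a real renderer the loop would typically also stop early once the transmittance is close to zero, as later samples no longer contribute visibly.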

+

+This approach may be extended arbitrarily, e.g. it is only a couple of phase functions

+away from being able to [[https://tree.kummerlaender.eu/projects/firmament/][recover the color produced by light travelling through the participating media that is our atmosphere]].

+

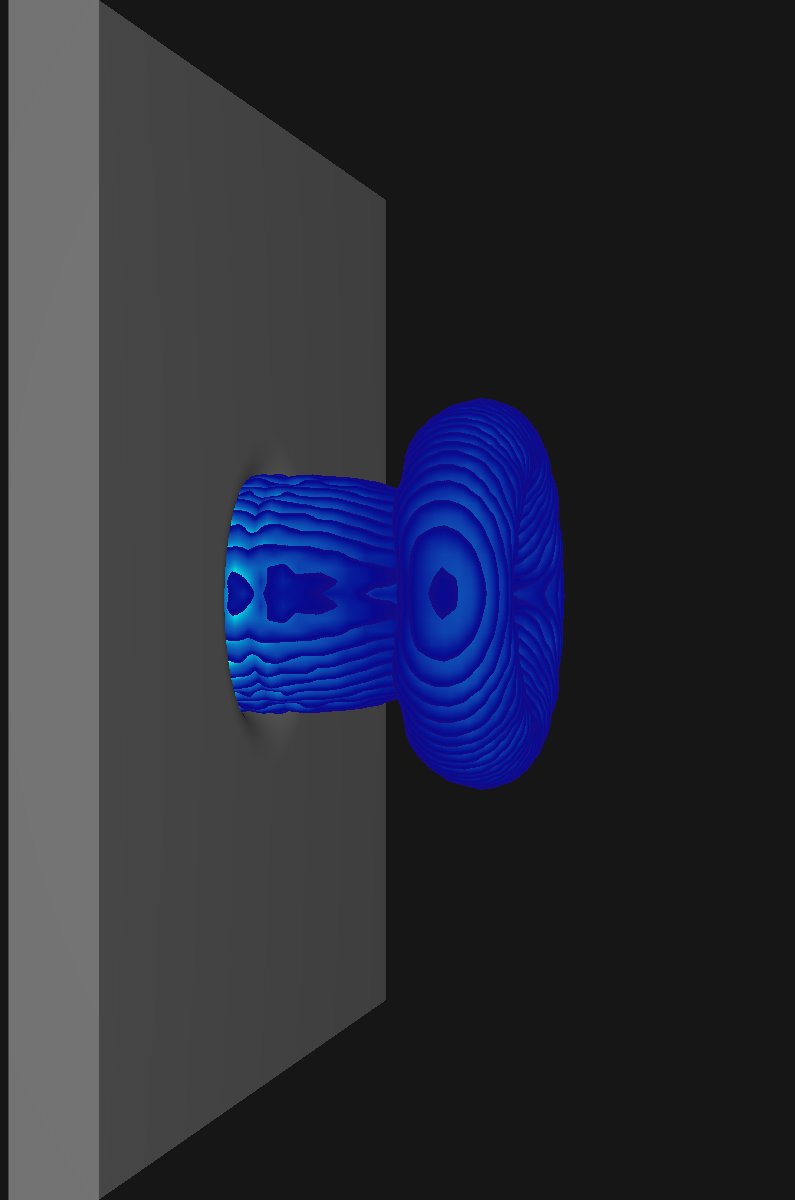

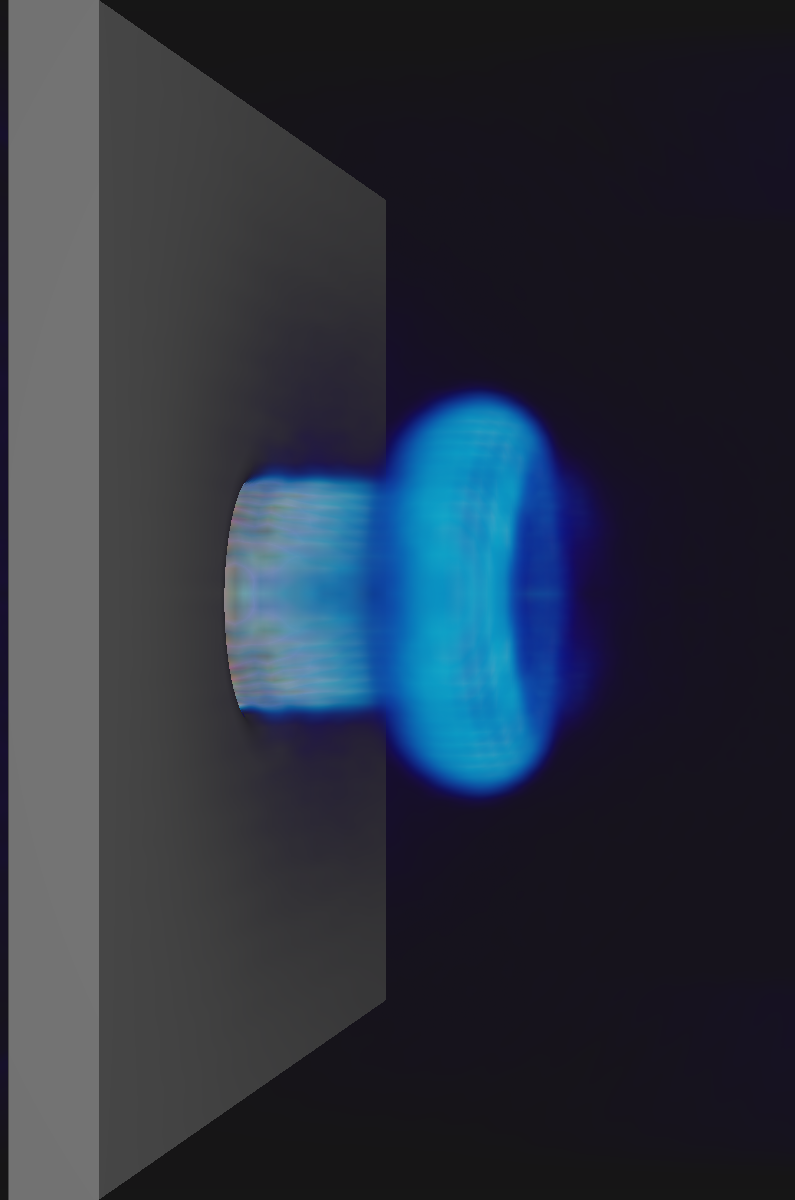

+** The Problem

+There are many different possibilities for the choice of samples \(c(x)\) in the volume rendering

+equation, e.g. velocity and curl norms, the scalar product of ray direction and shear layer

+normals, or vortex identifiers such as the Q criterion

+\[ Q = \|\Omega\|^2 - \|S\|^2 > 0 \text{ commonly thresholded to recover isosurfaces} \]

+that contrasts the local vorticity and strain rate norms. The strain rate tensor \(S\) is easily

+recovered from the non-equilibrium populations \(f^\text{neq}\) of the simulation lattice — and is in

+fact already used for the turbulence model. Similarly, the vorticity \(\Omega = \nabla \times u\) can be

+computed from the velocity field using a finite difference stencil.
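To make the Q criterion concrete, here is a small Python sketch that evaluates it at a single point. It is a simplification under stated assumptions: both the strain rate and the vorticity are taken from a central difference velocity gradient (whereas the text recovers \(S\) from \(f^\text{neq}\)), the norms are the Euclidean norm of the vorticity vector and the Frobenius norm of \(S\) without any prefactors, and the names `velocity_gradient` and `q_criterion` are hypothetical. Other norm conventions differ from this by constant factors.

```python
def velocity_gradient(u, p, h):
    """Central difference estimate of the 3x3 gradient du_i/dx_j at point p.

    u -- hypothetical callable mapping a point [x, y, z] to a velocity (ux, uy, uz)
    h -- stencil spacing
    """
    grad = [[0.0] * 3 for _ in range(3)]
    for j in range(3):
        forward = list(p)
        backward = list(p)
        forward[j] += h
        backward[j] -= h
        uf, ub = u(forward), u(backward)
        for i in range(3):
            grad[i][j] = (uf[i] - ub[i]) / (2.0 * h)
    return grad


def q_criterion(grad):
    """Q = |curl u|^2 - |S|^2 using the norm conventions assumed above."""
    # Strain rate tensor S = (grad + grad^T) / 2, squared Frobenius norm
    s_norm2 = sum(
        (0.5 * (grad[i][j] + grad[j][i])) ** 2
        for i in range(3)
        for j in range(3)
    )
    # Vorticity vector as the curl of u
    curl = (
        grad[2][1] - grad[1][2],
        grad[0][2] - grad[2][0],
        grad[1][0] - grad[0][1],
    )
    omega_norm2 = sum(c * c for c in curl)
    return omega_norm2 - s_norm2
```

For a rigid rotation such as `u = lambda p: (-p[1], p[0], 0.0)` the strain rate vanishes and Q is positive, while a pure strain field such as `u = lambda p: (p[0], -p[1], 0.0)` has no vorticity and yields a negative Q.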

+

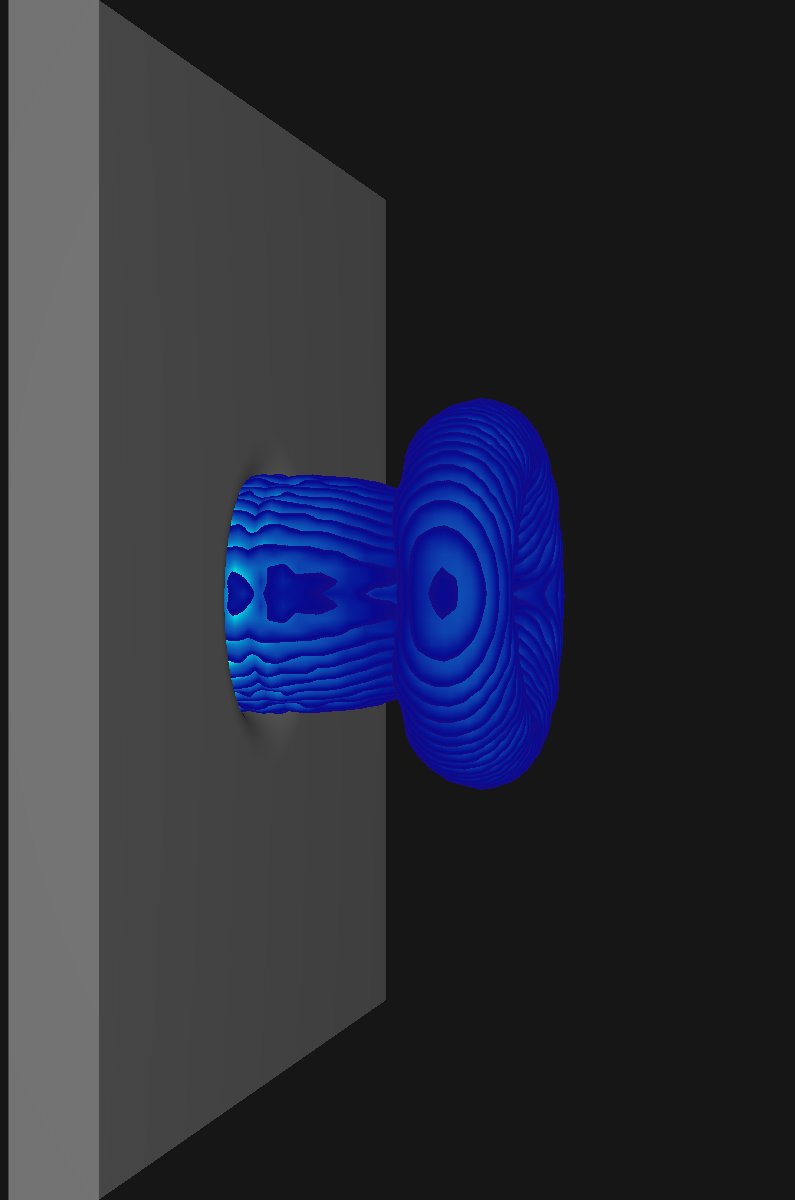

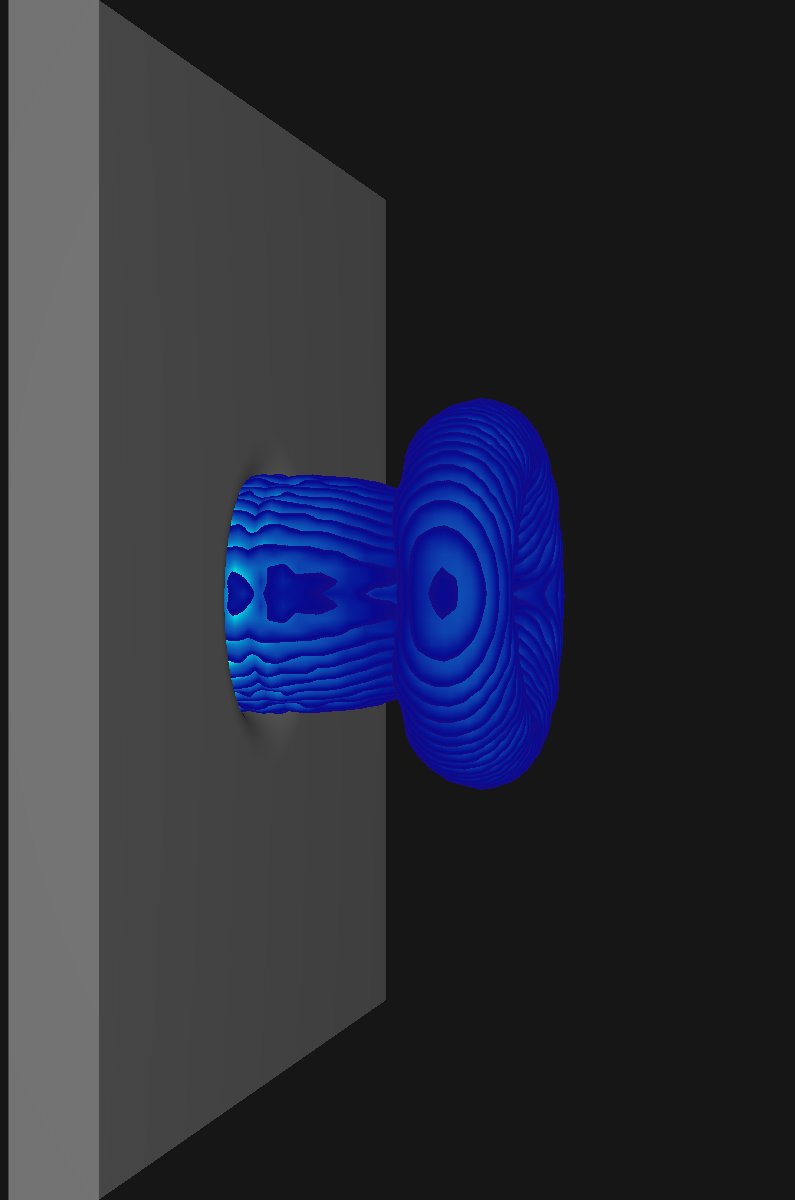

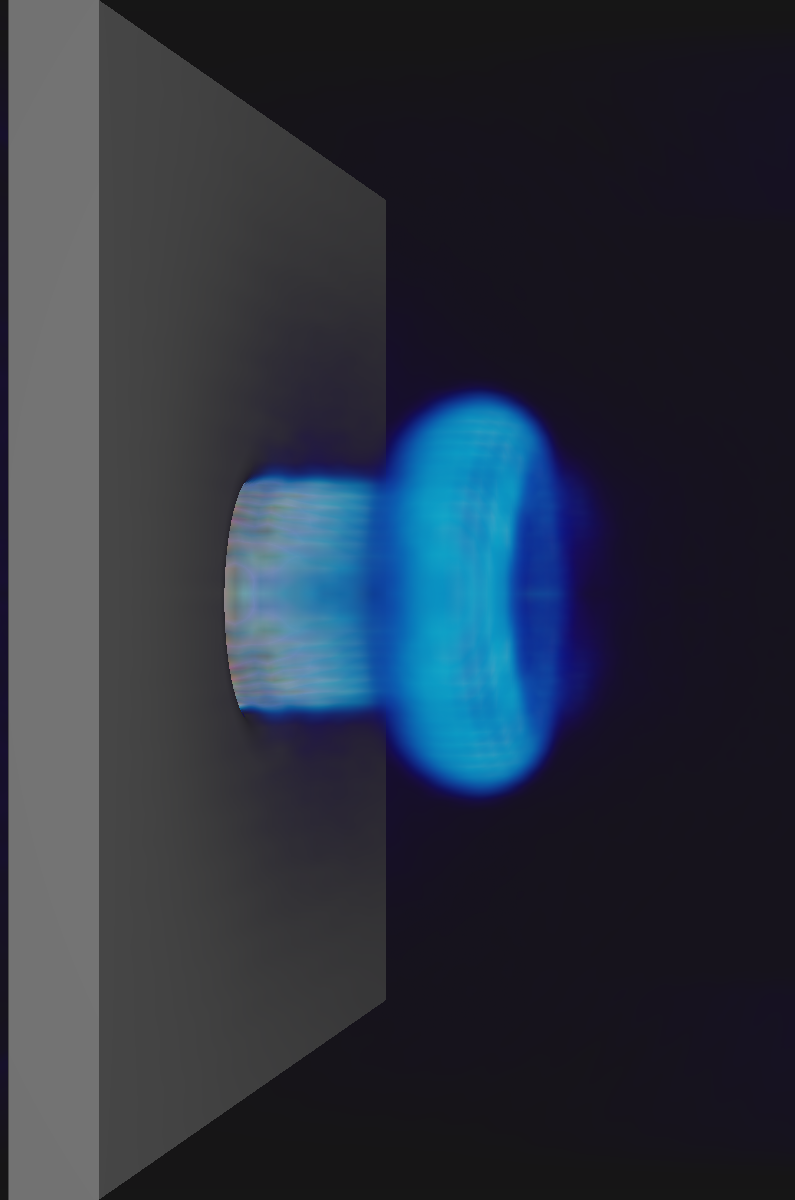

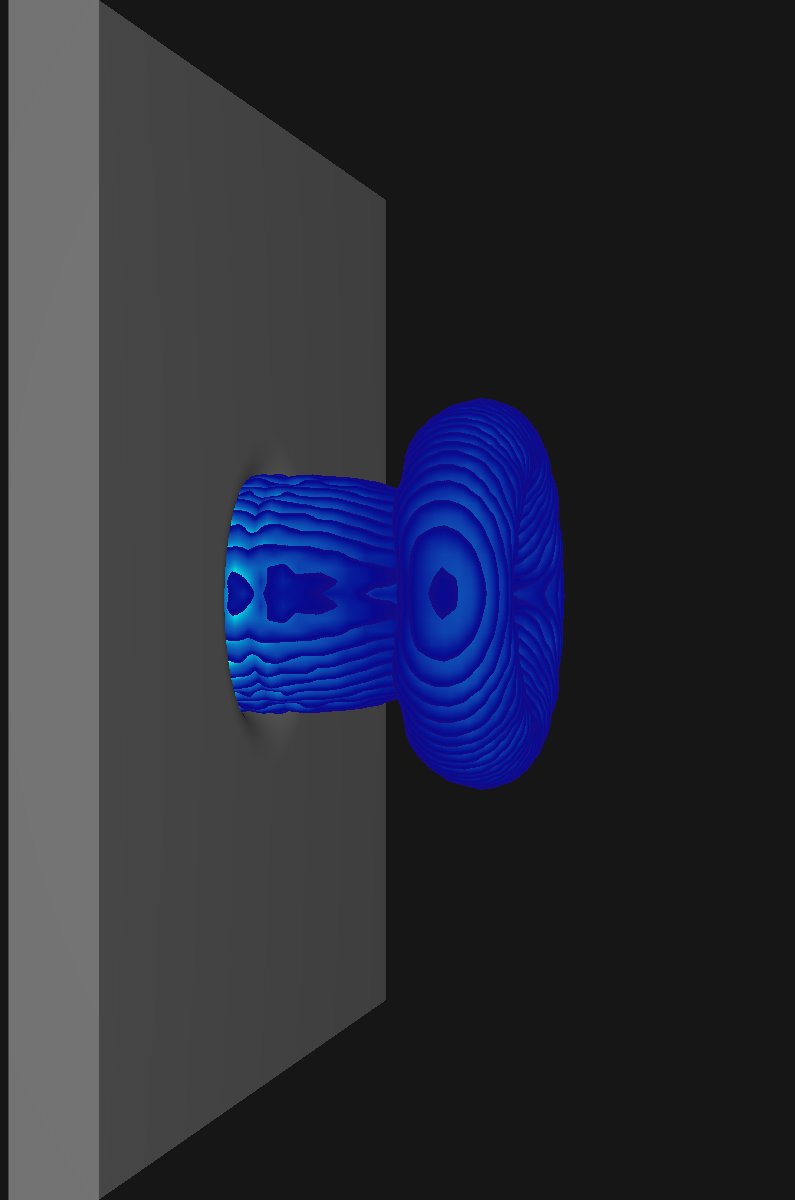

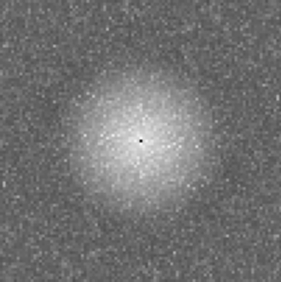

+The problem w.r.t. rendering when thresholding sampling values to highlight structures in the flow

+becomes apparent in the following picture:

+

+#+BEGIN_EXPORT html

+

+

+

Q Criterion

+

+

+

+

Curl Norm

+

+

+

+

+

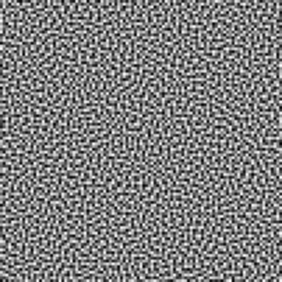

Blue noise texture

+

+

+

+

Fourier transformation

+

+

+

+

+

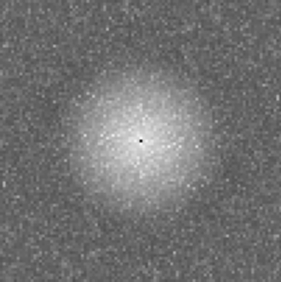

White noise texture

+

+

+

+

Fourier transformation

+

+

+

+

+

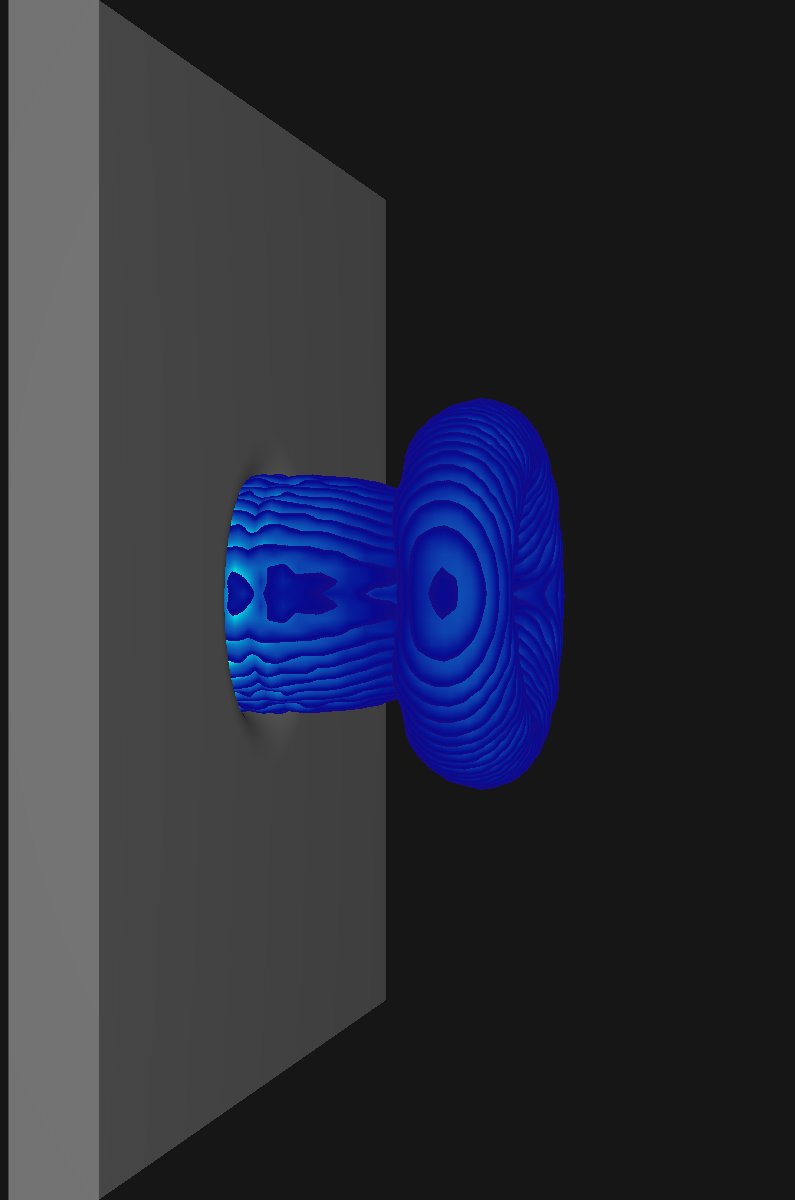

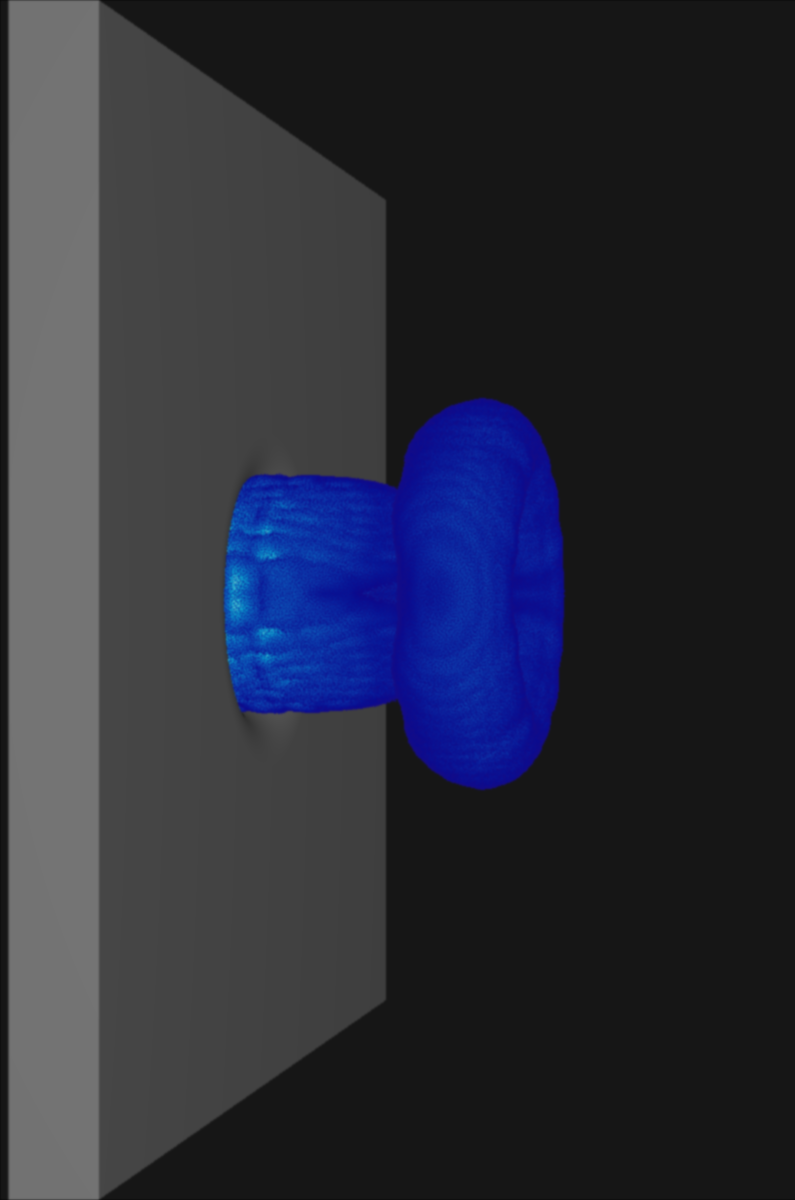

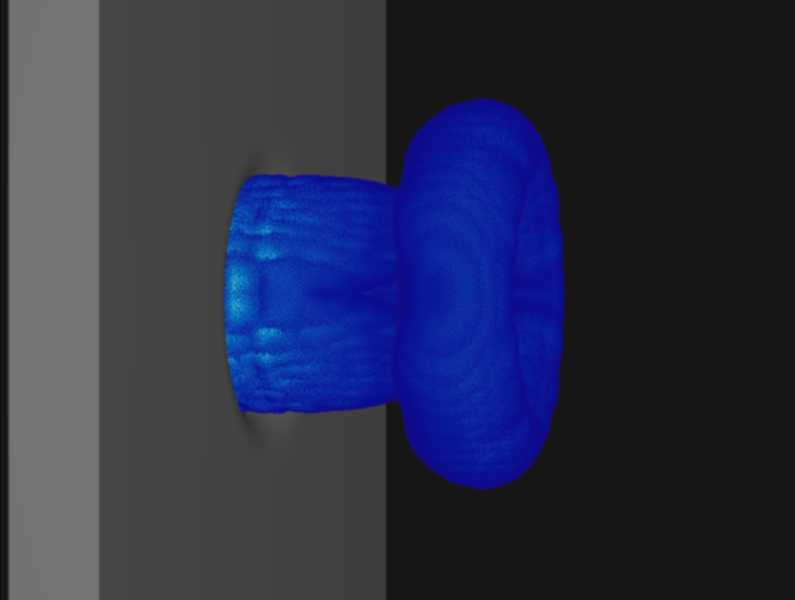

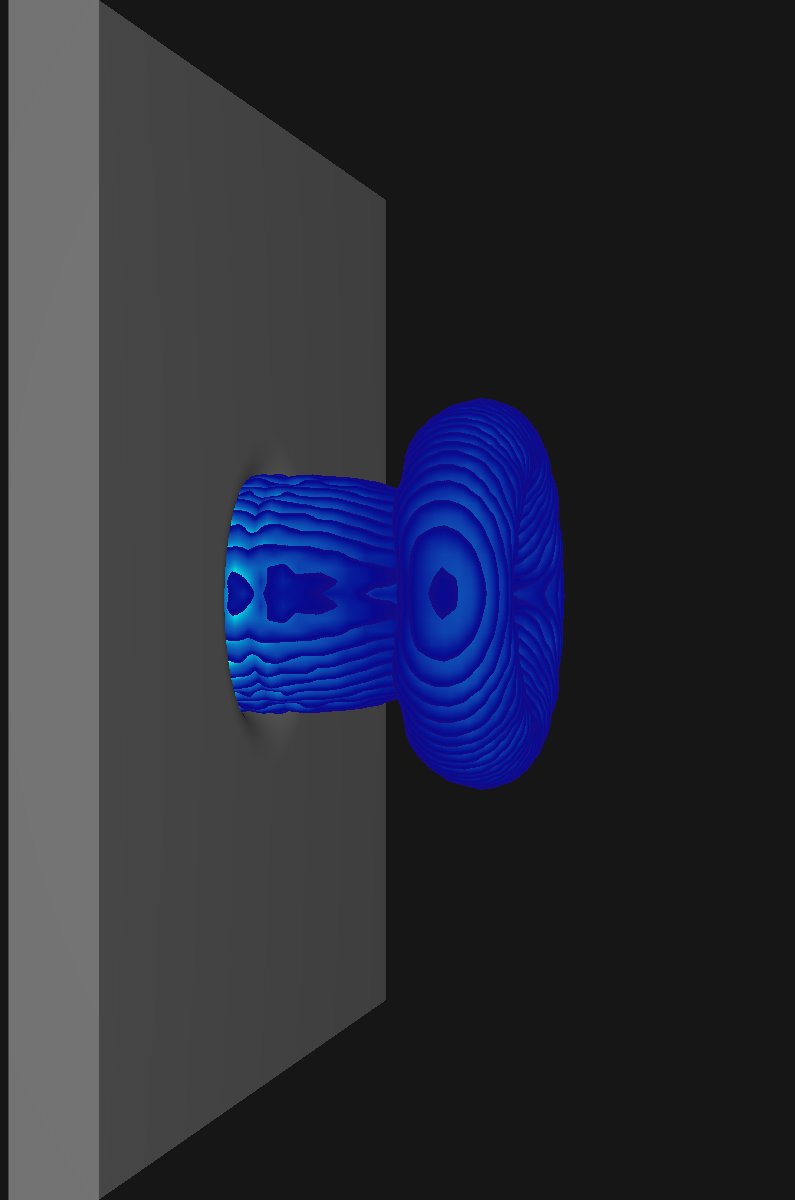

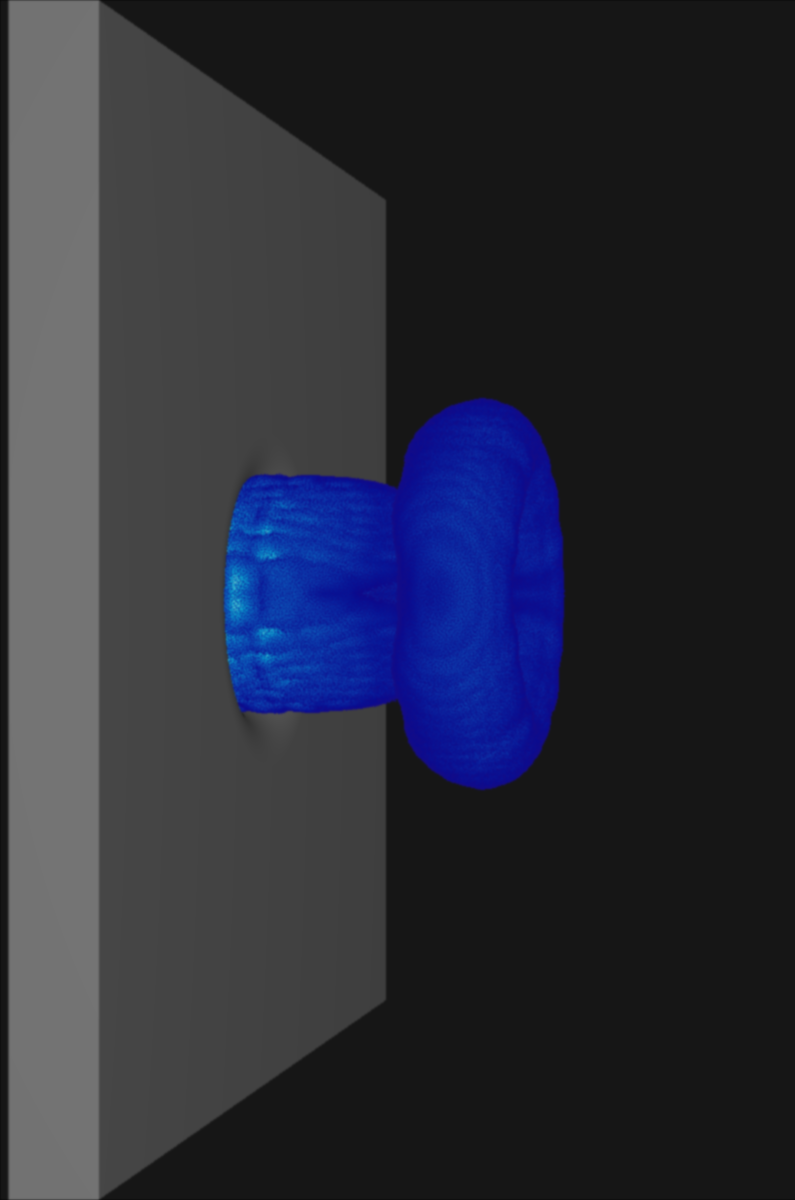

Simple ray marching

+

+

+

+

Ray marching with blue noise jittering

+

+

+

+

+

Blue noise

+

+

+

+

White noise

+

+

+

+

+ +

+ +

+ +

+ +

+ +

+ +

+ +

+ +

+ +

+ +

+ +

+ +

+ +

+